Agentic AI is on everyone's lips - a short primer

“Agentic AI” generally means AI systems composed of autonomous agents that pursue goals, plan multi‑step actions, use tools/APIs, and adapt with limited supervision. In practice, these agents blend reasoning (LLMs) with execution (tool calls, services, workflows) to complete tasks end‑to‑end. For example, an agent gathers facts, makes decisions, and then acts on them by booking, filing, or transforming data.

What makes the trend feel new is the maturation of large language models as planners and routers, plus frameworks that let agents run inside reliable, observable orchestrations. That combination pushes beyond single‑prompt “chat” into goal‑seeking systems that coordinate humans, rules, and tools across long‑running processes.

Haven't we built something like this before? Spoiler: yes, or at least a subset of it - just without the label

Back in 2010, Vidispine introduced a workflow engine into a highly distributed system: VPMS, the previous generation of VidiNet. Its modules, then called “workers” rather than “agents”, performed their tasks according to their defined logic. This integration gave us the following:

- Content‑oriented workflows with defined activities and dependencies,
- Runtime decisions driven by technical/semantic metadata (“flexible metadata model”),
- Parallel and dependent steps (e.g. LoRes proxy or keyframe generation starting once the first bytes arrived),
- Operational visibility (workflow design, versioning, tracking, restart/reactivation).

That stack empowered engineers to solve complex, high‑variance use cases, from watch‑folder ingest with conditional transcoding to parallel proxy workflows, and all of that through configuration rather than coding. Since then, we have orchestrated and scaled hundreds of worker/agent types for a wide variety of enterprise customers.

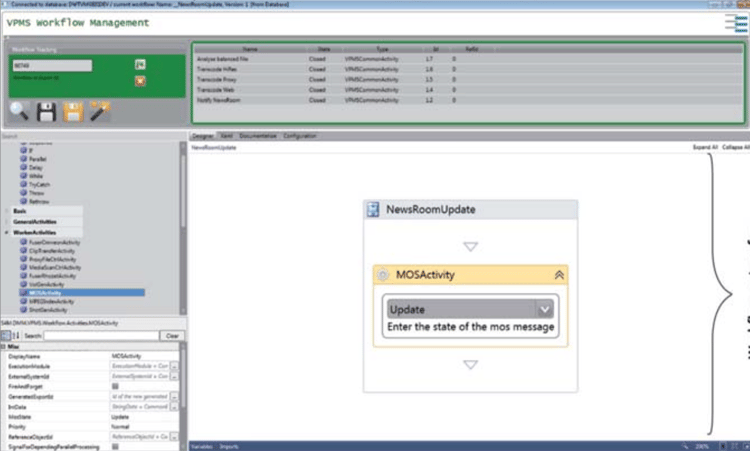

Let’s take a short moment to review the two generations of VidiNet's workflow management. The VPMS interface may look a little dated today, but under the hood it was a complete integration of a pure workflow engine, which was innovative and powerful for its time. For those interested in the history and details, an article on this topic is still available, albeit in German and only for members of FKTG (Integration von WF 4.0 | FKTG - Fernseh- und Kinotechnische Gesellschaft).

Workflow Designer in VPMS (2010)

Fast forward to the present day: products like VidiFlow (within the VidiNet ecosystem) continue this lineage with BPMN 2.0 compliant workflow modeling, a decision and rule engine, agent/microservice connectors for third‑party systems, and a monitoring UI, all core tenets of deterministic, enterprise‑grade orchestration. One thing that’s often overlooked is that, beyond simple demo examples, enterprise use cases usually require a lot of domain- and business-specific logic. The engine needs to support this logic so that workflows can be both designed and reliably executed.

Example of a complex workflow execution in VidiFlow

What's actually different now?

Deterministic workflows, modeled for example with BPMN, DMN, or state machines, follow clearly defined paths. They include rules for timeouts, retries, and error handling, which makes them a good fit for tasks that need to be repeatable and easy to monitor. They are especially useful in areas like broadcast chains, where compliance and recovery are important.
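
To make the idea of a fixed retry policy a bit more concrete, here is a minimal Python sketch. It is not how VidiFlow or any BPMN engine implements retries; `run_step`, `StepResult`, and the simulated `make_flaky_transcode` worker are hypothetical names invented for illustration, and timeouts are left out for brevity.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    ok: bool
    attempts: int
    value: object = None
    error: str = ""


def run_step(action: Callable[[], object], max_retries: int = 3,
             backoff_seconds: float = 0.0) -> StepResult:
    """Run one workflow step with a fixed retry policy (hypothetical helper)."""
    last_error = ""
    for attempt in range(1, max_retries + 1):
        try:
            return StepResult(ok=True, attempts=attempt, value=action())
        except Exception as exc:  # a real engine would catch specific errors
            last_error = str(exc)
            time.sleep(backoff_seconds)
    # All attempts failed: report the error so the workflow can take its error path.
    return StepResult(ok=False, attempts=max_retries, error=last_error)


def make_flaky_transcode(failures_before_success: int) -> Callable[[], str]:
    """Simulated worker that fails a fixed number of times, then succeeds."""
    calls = {"count": 0}

    def transcode() -> str:
        calls["count"] += 1
        if calls["count"] <= failures_before_success:
            raise RuntimeError("transcoder busy")
        return "proxy.mp4"

    return transcode


result = run_step(make_flaky_transcode(2), max_retries=3)
print(result)
```

The point of the sketch is that the outcome is fully determined by the policy: the same failures always lead to the same number of attempts and the same final state, which is what makes such steps easy to monitor and audit.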

Agentic AI adds flexible, non-deterministic steps using large language models and tools. It can route tasks dynamically, summarize content, classify data, plan actions, or make decisions when fixed rules don’t work well.

The modern approach combines both ideas. Agents are placed inside deterministic workflows. This gives you flexibility while still keeping things traceable and reliable. Let's have a look at what those kinds of agents are and how they are defined.
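
As a rough illustration of an agent placed inside a deterministic workflow, the following Python sketch keeps the path fixed (ingest, route, execute) and lets a pluggable agent make only the routing decision. Everything here is assumed for the example: the route names, `rule_based_route`, and `run_workflow` are hypothetical, and the agent is a plain callable standing in for an LLM call.

```python
from typing import Callable

# Hypothetical set of routes the workflow is allowed to take.
ALLOWED_ROUTES = {"transcode", "manual_review", "archive"}


def rule_based_route(metadata: dict) -> str:
    """Deterministic fallback: an explicit condition-action rule."""
    return "transcode" if metadata.get("qc_passed") is False else "archive"


def run_workflow(metadata: dict, agent: Callable[[dict], str]) -> list[str]:
    """Fixed path: ingest -> route (agent) -> execute. Returns an audit trail."""
    audit = ["ingest"]
    try:
        route, source = agent(metadata), "agent"
    except Exception:
        route, source = None, "agent"
    # Contain the agent: anything outside the allowed routes falls back to the rule.
    if route not in ALLOWED_ROUTES:
        route, source = rule_based_route(metadata), "fallback"
    audit.append(f"route:{route}:{source}")
    audit.append(f"execute:{route}")
    return audit


# Stub agents standing in for a model call.
print(run_workflow({"qc_passed": False}, lambda m: "manual_review"))
print(run_workflow({"qc_passed": False}, lambda m: "delete_everything"))
```

The agent may propose anything, but the workflow only ever executes a route from the allowed set, and the audit trail records whether the decision came from the agent or from the fallback rule.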

Five Types of Agents (AI Theory)

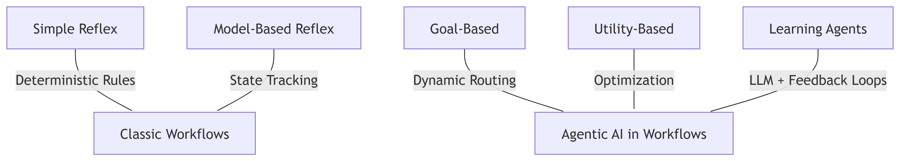

Agentic AI discussions often borrow from Russell & Norvig’s agent taxonomy:

- Simple Reflex Agents
  - Act on current percepts only, using condition–action rules.
  - Analogy: Deterministic workflows in broadcast (e.g., “If QC fails → transcode”) mirror this: decisions based on explicit metadata/state, no learning or planning.
- Model-Based Reflex Agents
  - Maintain an internal state to handle partially observable environments.
  - Analogy: Workflows that track job state across retries or conditional branches.
- Goal-Based Agents
  - Choose actions by considering goals and possible outcomes.
  - Analogy: AI-driven routing in ingest pipelines (e.g., “Choose best transcode path to meet SLA”).
- Utility-Based Agents
  - Optimize for preferences or utility functions, not just goal satisfaction.
  - Analogy: Cost-aware resource allocation in cloud-based media workflows.
- Learning Agents
  - Improve performance over time by learning from experience.
  - Analogy: LLM-powered enrichment that adapts prompts or fine-tunes based on feedback.
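
The differences between the first four agent types can be sketched in a few lines of Python. This is a toy illustration only: the transcode paths, their durations and costs, and the utility formula are all made-up assumptions, and the learning agent is omitted because it would need a feedback loop that does not fit a short example.

```python
def simple_reflex(percept: dict) -> str:
    """Condition-action rule on the current percept only."""
    return "transcode" if not percept["qc_passed"] else "deliver"


class ModelBasedReflex:
    """Keeps internal state (retry count per job) between percepts."""

    def __init__(self, max_retries: int = 2) -> None:
        self.max_retries = max_retries
        self.retries: dict[str, int] = {}

    def act(self, percept: dict) -> str:
        if percept["qc_passed"]:
            return "deliver"
        seen = self.retries.get(percept["job_id"], 0)
        self.retries[percept["job_id"]] = seen + 1
        return "transcode" if seen < self.max_retries else "escalate"


# Hypothetical transcode paths: (name, minutes, relative cost).
PATHS = [("cpu_farm", 30, 1.0), ("gpu_cloud", 8, 4.0), ("gpu_spot", 12, 2.0)]


def goal_based(sla_minutes: int) -> list[str]:
    """Return every path that satisfies the goal (finish within the SLA)."""
    return [name for name, minutes, _ in PATHS if minutes <= sla_minutes]


def utility_based(sla_minutes: int, cost_weight: float = 1.0) -> str:
    """Among paths meeting the SLA, pick the one with the highest utility."""
    def utility(path: tuple) -> float:
        _, minutes, cost = path
        # Assumed utility: faster and cheaper is better.
        return -(minutes / sla_minutes) - cost_weight * cost

    candidates = [p for p in PATHS if p[1] <= sla_minutes]
    best = max(candidates, key=utility, default=None)
    return best[0] if best else "escalate"


print(goal_based(15))     # all paths that meet a 15-minute SLA
print(utility_based(15))  # the preferred one among them
```

The goal-based function only asks whether a path meets the SLA, while the utility-based one also ranks the acceptable paths by a trade-off between speed and cost.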

Pros and cons of autonomous decision-making

Autonomous agents powered by LLMs offer several advantages. They are flexible and can handle messy or unexpected situations, such as different formats or vendor quirks, without relying on complex rules. Agents can also triage issues up front and escalate only the exceptions, which improves speed and reduces manual effort. Another benefit is faster iteration: business teams can update prompts and policies more quickly than developers can change code.

However, there are also challenges. LLMs can be unpredictable, so it’s important to use guardrails like schemas, validators, and clear steps for each agent. Error handling is harder than in traditional systems, where retries and fallback mechanisms cover most failures, so these patterns should remain part of the overall workflow. Compliance and auditability also matter: deterministic engines track versions and actions, so it’s best to use them to manage and contain agent behavior.
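
One way such a guardrail could look is sketched below in Python: the model's raw output is parsed as JSON and checked against a small hand-written schema, invalid answers are retried, and anything still invalid is escalated rather than guessed. The label set, the field names, and the `call_model` callable are assumptions made up for this example; a real setup would likely use a schema library and an actual model client.

```python
import json

# Hypothetical label set for a content classifier.
ALLOWED_LABELS = {"news", "sports", "entertainment"}


def validate(raw: str) -> dict:
    """Check that raw model output is JSON matching the expected schema."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    label = data.get("label")
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"unknown label: {label!r}")
    confidence = data.get("confidence")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence out of range")
    return data


def classify_with_guardrails(call_model, text: str, max_attempts: int = 2) -> dict:
    """Retry invalid outputs, then escalate to a human instead of guessing."""
    errors = []
    for _ in range(max_attempts):
        try:
            return {"status": "ok", **validate(call_model(text))}
        except ValueError as exc:
            errors.append(str(exc))
    return {"status": "escalate", "errors": errors}


# Stub model: first answer is malformed, second one is valid.
outputs = iter(["not json", '{"label": "sports", "confidence": 0.9}'])
print(classify_with_guardrails(lambda t: next(outputs), "match report"))
```

The validator and the retry loop are deterministic, so even though the model's answers are not, the workflow around them always ends in one of two well-defined states: a validated result or an escalation with the recorded errors.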

What's next in the series?

So far, we’ve focused on general definitions and concepts around Agentic AI. In the next article, we’ll dive into broadcast-specific use cases and explore where non-deterministic agents can offer real advantages.

Stay tuned and greetings from Ulrich and Ralf!

Ulrich Ening is a media-technology product manager based in Cologne, bringing extensive experience in broadcast, IT, and workflow management from various international projects. At Vidispine, he focuses on developing innovative, user-centric media solutions while integrating AI-driven tools to optimize workflows and enhance user experiences.